Bias In, Bias Out

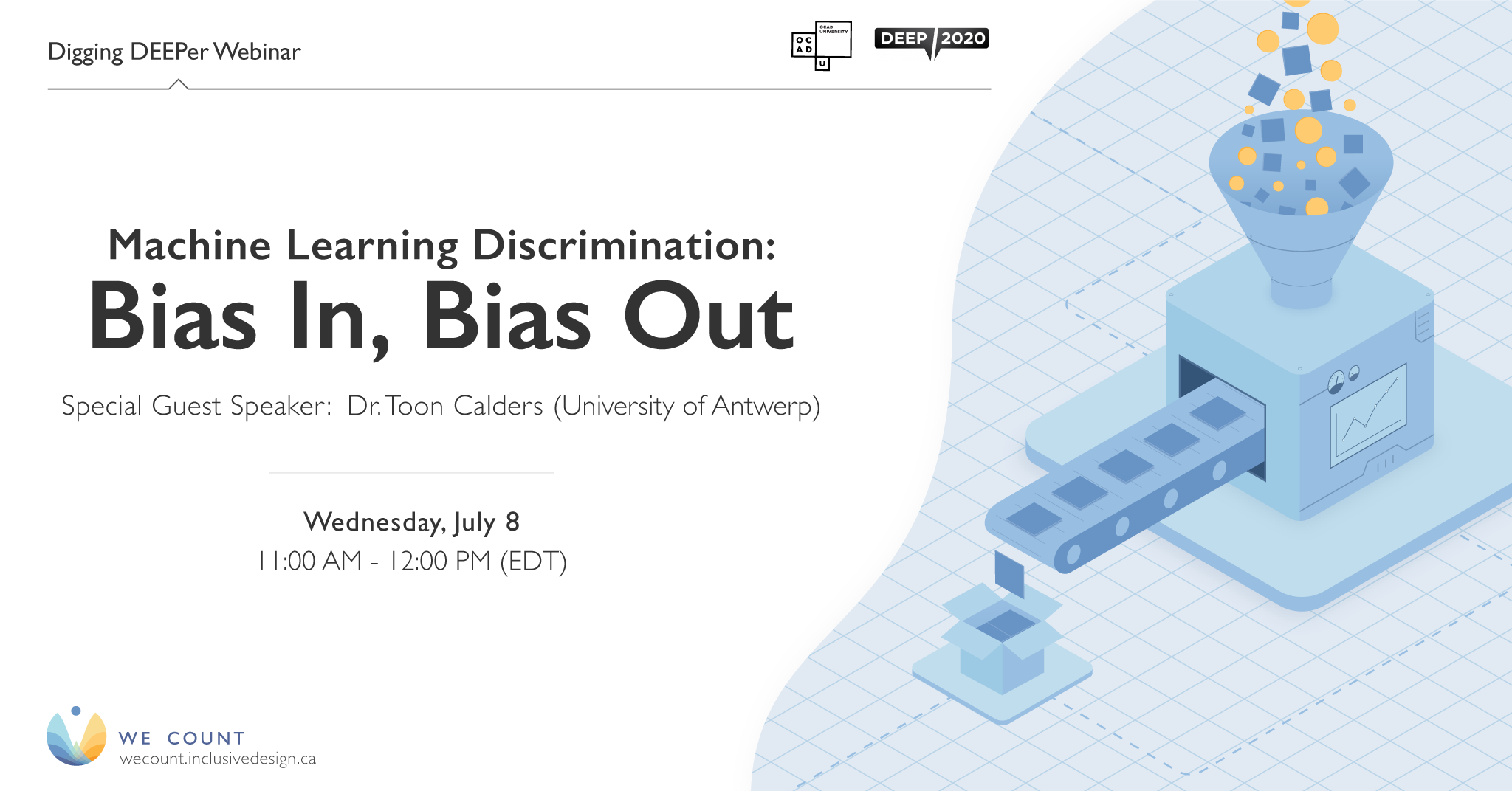

Machine Learning Discrimination: Bias In, Bias Out

Featuring Dr. Toon Calders

July 8, 2020, 11:00 AM – 12:30 PM (EST)

Artificial intelligence is increasingly responsible for decisions that profoundly affect our lives. But predictions made with data mining and algorithms can affect population subgroups differently. Academic researchers and journalists have shown that decisions made by predictive algorithms sometimes lead to biased outcomes, reproducing inequalities already present in society. Is it possible to build a fairness-aware data mining process? Are algorithms biased because people are? Or does the bias stem from how machine learning works at its most fundamental level?